Here are the new bike lanes Toronto is due to get in the next few months

Toronto has been seriously ramping up its cycling infrastructure in recent years, to the celebration of some and the chagrin of others, and another spate of bike lanes is being considered for implementation on streets across various areas of the city before the end of this year.

Proposed by the Infrastructure and Environment Committee and heading to City Council on Wednesday, the new lanes are just one part of a larger cycling network plan that is being bundled with new sidewalks that will complete missing links for both cyclists and pedestrians in some areas.


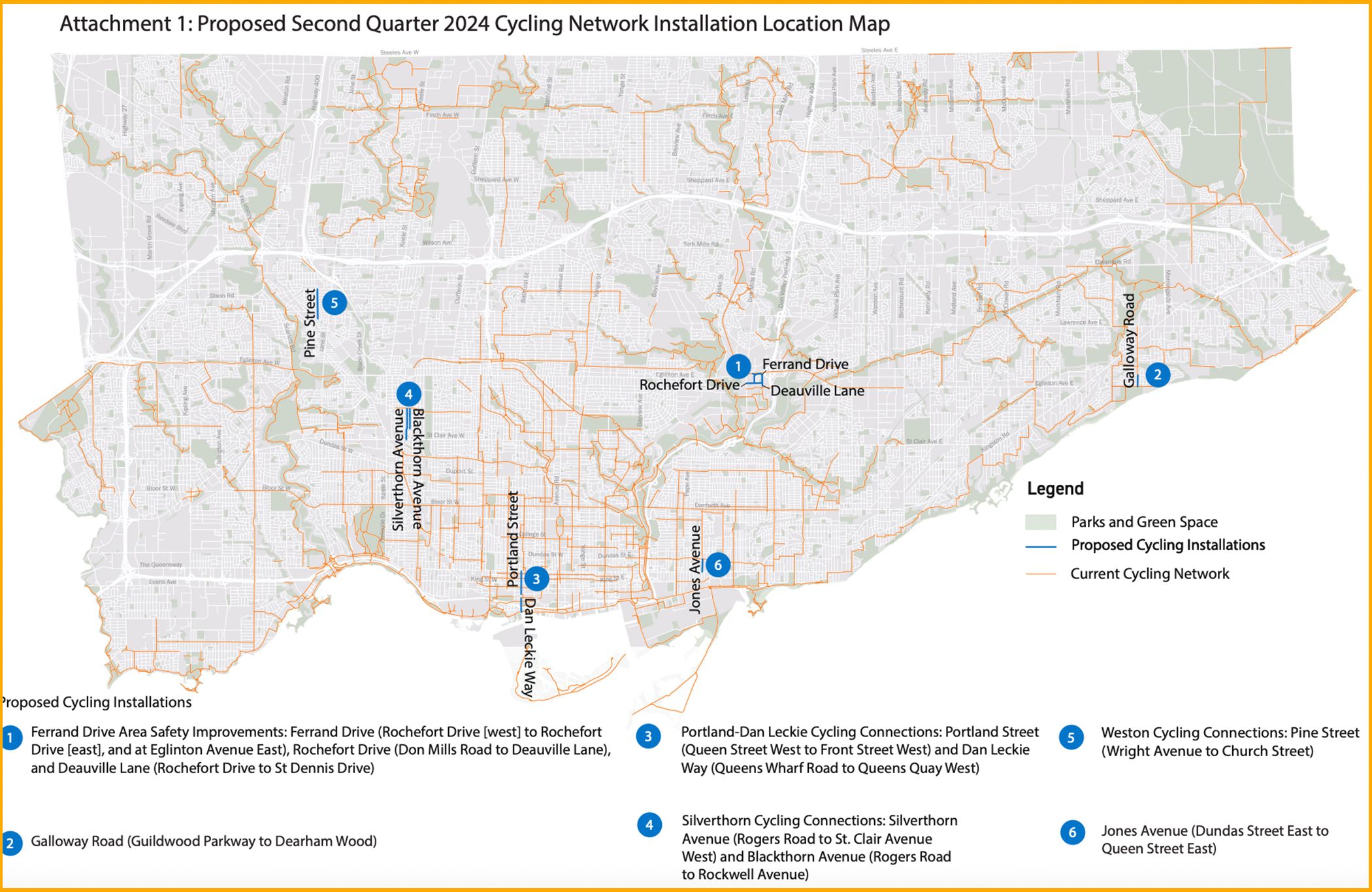

The portions of roadway that are slated for new bike lanes, pending council deliberation, are:

- Ferrand Drive from Rochefort Drive West to Rochefort Drive East and at Eglinton Avenue East (uni-directional cycle tracks and contra-flow bicycle lanes at Eglinton)

- Rochefort Drive from Don Mills Road to Deauville Lane (uni-directional cycle tracks)

- Deauville Lane from Rochefort Drive to St. Dennis Drive (uni-directional cycle tracks)

- Galloway Road from Guildwood Parkway to Dearham Wood (bicycle lanes)

- Dan Leckie Way from Queens Quay West to Queens Wharf Road (bi-directional cycle tracks)

- Portland Street from Queen Street West to Front Street West (bi-directional cycle tracks)

- Silverthorn Avenue from Rogers Road to Lane North St. Clair East Cloverdale (contra-flow bike lanes)

- Rockwell Avenue from Silverthorn Avenue (west branch) to Silverthorn Avenue (east branch) (bi-directional cycle tracks)

- Blackthorn Avenue from Rogers Road to Rockwell Avenue (contra-flow bicycle lanes)

- Pine Street (west side) from Wright Avenue to Church Street (bicycle lanes) and Pine Street (east side) from Wright Avenue to King Street (bicycle lanes)

- Richmond Street from Augusta Avenue to Portland Street (bi-directional cycle tracks)

- Jones Avenue from Queen Street to Dundas Street (uni-directional cycle tracks)

All-way compulsory stop controls are also being suggested for some of the above intersections, along with speed limit reductions and some other changes in line with the City's Vision Zero campaign to make roads safer for pedestrians and those on two wheels.

A map of the new bike infrastructure that could be in place by Q2 2024.

The same motion also requests that sidewalks be completed in the following areas in 2024:

- Edgebrook Drive (south side, from 30 metres west of Bankfield Drive to Bankfield Drive)

- Fishleigh Drive (south side fronting Scarborough Heights Park)

- Mayfield Avenue (south side from Armadale Avenue to Willard Garden Parkette)

- Rannock Street (north side from Craigton Drive to [15 metres East] Rannock Street)

- Sorauren Avenue (east side fronting 239 Sorauren Avenue)

If approved, the connections would ideally be completed by the second quarter of 2024.

A Great Capture/Flickr
